Hadoop Cluster

In Terrascope, we harness the power of Hadoop, Spark, and YARN to manage and process our extensive Earth observation data efficiently. Our platform leverages these technologies to ensure robust data handling, fast processing, and optimal resource management.

Hadoop

At the core of our data storage system is Hadoop’s HDFS (Hadoop Distributed File System). It enables us to distribute vast amounts of satellite imagery across multiple nodes, ensuring reliability and accessibility.

Spark

Spark integrates seamlessly with Hadoop, running on top of Hadoop YARN within Terrascope. This integration enables us to utilize Spark’s potent batch and stream processing features to tackle various big data challenges.

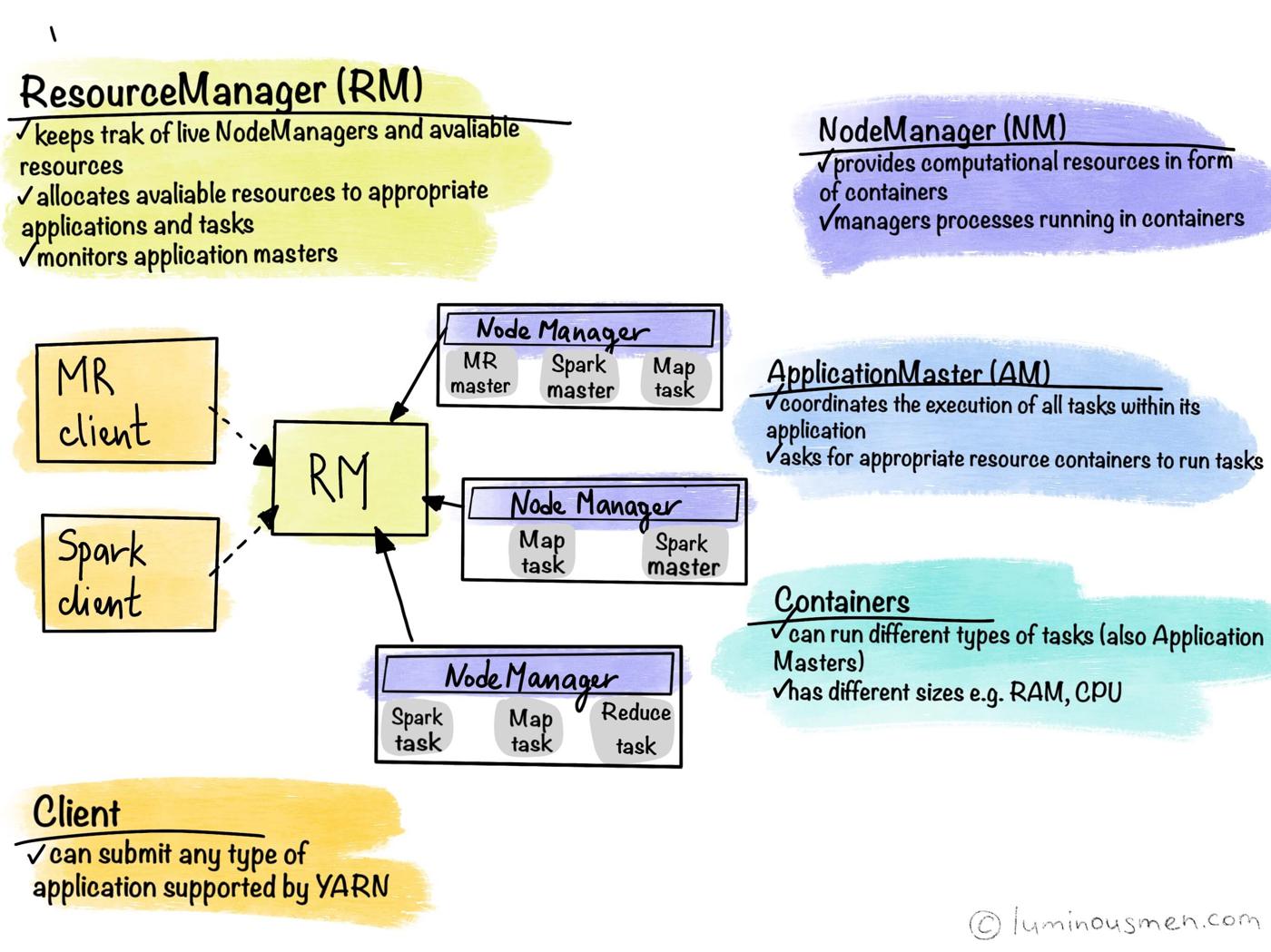

YARN

YARN (Yet Another Resource Negotiator) handles resource allocation and task scheduling for the cluster. Much like an operating system, it manages the cluster’s processing resources (CPU and memory), while HDFS provides the storage layer. YARN allocates and schedules computational tasks across the nodes and assigns resources dynamically, which improves the overall utilisation and throughput of our data processing workflows.

More details on the resource management by YARN can be found here.

Additional Resources

Various guides and best practices are available for users who want to get started with, or learn more about, setting up a Spark job, managing resources, and accessing logs. These resources cover the following topics:

Start a Spark Job using Spark 3:

To speed up processing in Terrascope, it is recommended to distribute tasks using the Apache Spark framework. The cluster runs Spark version 2.3.2, with newer versions available upon request. Spark is pre-installed on the Virtual Machine, allowing `spark-submit` to run from the command line. The `yarn-cluster` mode must be used for Hadoop cluster jobs, and authentication with Kerberos is required by running `kinit` on the command line. Commands like `klist` and `kdestroy` help manage Kerberos authentication status. For Python 3.8 or above, Spark 3 must be used due to compatibility issues. A Python Spark example is available to help users get started.

Manage Spark resources:

Optimizing Spark resource management is crucial for efficient data processing. This guide provides insights into configuring Spark resources effectively, ensuring optimal performance and resource utilization. For an in-depth understanding of Spark resource management, refer to the Spark Resource Management guide.
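As a rough illustration of how resource settings reach a job, the sketch below assembles a `spark-submit` command line in Python. It is not taken from the Terrascope guides: the helper name `build_spark_submit`, the script name `my_job.py`, and all resource values are hypothetical placeholders. Cluster mode on YARN is written here as `--master yarn --deploy-mode cluster`, on the assumption that this is the form the installed Spark version expects.

```python
import shlex


def build_spark_submit(script, num_executors=4, executor_memory="4g",
                       executor_cores=2, driver_memory="2g", extra_conf=None):
    """Return the spark-submit argument list for a YARN cluster-mode job.

    All default values are illustrative placeholders, not recommended
    settings for the Terrascope cluster.
    """
    cmd = [
        "spark-submit",
        "--master", "yarn",
        "--deploy-mode", "cluster",
        "--num-executors", str(num_executors),
        "--executor-memory", executor_memory,
        "--executor-cores", str(executor_cores),
        "--driver-memory", driver_memory,
    ]
    # Additional Spark properties are passed as repeated --conf key=value pairs.
    for key, value in sorted((extra_conf or {}).items()):
        cmd += ["--conf", f"{key}={value}"]
    # The application script comes last, after all options.
    cmd.append(script)
    return cmd


if __name__ == "__main__":
    command = build_spark_submit(
        "my_job.py",  # hypothetical job script
        extra_conf={"spark.executor.memoryOverhead": "1g"},
    )
    # Print a shell-safe version of the command for inspection.
    print(shlex.join(command))
```

Building the command as a list keeps each option and its value together and avoids shell quoting problems if the list is later passed to `subprocess.run`.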

Advanced Kerberos:

To use Hadoop services, including Spark, YARN, and HDFS, users must have a valid Ticket Granting Ticket (TGT). This page summarises insights on Kerberos.
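Because a missing or expired ticket makes Hadoop commands fail, it can help to check for one before submitting a job. The sketch below is one possible way to do that from Python, assuming the `klist` on the machine supports the `-s` (silent) option as MIT Kerberos does; the function name `has_valid_ticket` is a hypothetical helper, not part of any Terrascope tooling.

```python
import subprocess


def has_valid_ticket(run=subprocess.run):
    """Return True if `klist -s` reports a readable, unexpired credentials cache.

    Assumes an MIT Kerberos style klist, where `-s` prints nothing and
    signals the result through the exit status (0 means a valid cache).
    The `run` parameter exists only so the check can be exercised without
    a real Kerberos installation.
    """
    try:
        result = run(["klist", "-s"], check=False)
    except FileNotFoundError:
        # klist is not installed or not on PATH.
        return False
    return result.returncode == 0


if __name__ == "__main__":
    if has_valid_ticket():
        print("Valid Kerberos ticket found.")
    else:
        print("No valid Kerberos ticket; run kinit first.")
```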

Access Spark Logs:

Spark logs are essential for monitoring and troubleshooting Spark jobs. Refer to the Accessing Spark Logs section for detailed instructions.
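On YARN clusters, one common way to retrieve the logs of a finished application is the `yarn logs` command. Whether this applies to Terrascope (it depends on log aggregation being enabled) is an assumption here, and the linked section remains the authoritative description. The helper name and the application ID below are hypothetical.

```python
import re

# YARN application IDs have the form application_<cluster timestamp>_<sequence>.
APP_ID_PATTERN = re.compile(r"^application_\d+_\d+$")


def build_yarn_logs_command(application_id):
    """Return the `yarn logs` argument list for one application ID."""
    if not APP_ID_PATTERN.match(application_id):
        raise ValueError(f"not a YARN application ID: {application_id!r}")
    return ["yarn", "logs", "-applicationId", application_id]


if __name__ == "__main__":
    # Hypothetical ID; the real one is reported when the job is submitted.
    print(" ".join(build_yarn_logs_command("application_1700000000000_0042")))
```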

Using Docker on Hadoop:

For users interested in running Docker on Hadoop, this page provides detailed setup instructions. This can be useful when a job needs a Spark or Python version that isn’t available on the cluster.

Manage permissions and ownership:

Managing file permissions and ownership is crucial for data security and integrity. Refer to the Managing Spark file permission and ownership page for details.

Spark best practices:

For a better understanding of Spark best practices, refer to the Spark best practices guide, which provides insights into optimizing Spark workflows and improving data processing efficiency.